Snapshots: A snapshot is a read-only copy of a file system or volume. Snapshots can be created extremely quickly and initially consume no additional space within the pool. As data within the active dataset changes, the snapshot consumes more space, because it holds on to data that is no longer shared with the active dataset. Snapshots of volumes can be cloned or rolled back, but cannot be accessed independently. File system snapshots can be accessed under the .zfs/snapshot directory in the root of the file system. Snapshots are automatically mounted on demand and may be unmounted at regular intervals. The visibility of the .zfs directory can be controlled by the snapdir property.

Clones: A clone is a writable volume or file system whose initial contents are the same as another dataset. As with snapshots, creating a clone is nearly instantaneous and initially consumes no additional space. Clones can only be created from a snapshot. When a snapshot is cloned, it creates an implicit dependency between the parent and child. Even though the clone is created somewhere else in the dataset hierarchy, the original snapshot cannot be destroyed as long as a clone exists. The origin property exposes this dependency, and the destroy command lists any such dependencies, if they exist.
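To make the snapshot and clone rules concrete, here is a minimal Python sketch. It is a toy in-memory model, not ZFS itself and not any ZFS API: datasets are assumed to be sets of integer block IDs, a snapshot just records the block set at creation time, and overwriting a block is modeled as copy-on-write (the live dataset drops the old block ID and gains a new one). All names (`ToyPool`, `overwrite`, `destroy_snapshot`, `DependencyError`, and so on) are hypothetical.

```python
from dataclasses import dataclass, field


class DependencyError(Exception):
    """Raised when a snapshot still has clones that depend on it (hypothetical)."""


@dataclass
class Snapshot:
    blocks: frozenset                          # block IDs captured when the snapshot was taken
    clones: set = field(default_factory=set)   # names of clones created from this snapshot


class ToyPool:
    """Hypothetical in-memory model of snapshots and clones (not the real ZFS API)."""

    def __init__(self):
        self.live = {}    # dataset name -> set of block IDs it currently references
        self.snaps = {}   # (dataset, snapname) -> Snapshot
        self.origin = {}  # clone name -> (dataset, snapname) it was cloned from

    def create(self, name, blocks=()):
        self.live[name] = set(blocks)

    def snapshot(self, dataset, snapname):
        # Nothing is copied: the snapshot only records the current block IDs.
        self.snaps[(dataset, snapname)] = Snapshot(frozenset(self.live[dataset]))

    def overwrite(self, dataset, old_block, new_block):
        # Copy-on-write: new data lands in a new block; the old block stays with any snapshot.
        self.live[dataset].discard(old_block)
        self.live[dataset].add(new_block)

    def snapshot_held_blocks(self, dataset, snapname):
        # Blocks the snapshot keeps alive that the live dataset no longer references
        # (other snapshots and clones are ignored in this toy).
        return len(self.snaps[(dataset, snapname)].blocks - self.live[dataset])

    def clone(self, dataset, snapname, clone_name):
        snap = self.snaps[(dataset, snapname)]
        self.live[clone_name] = set(snap.blocks)  # starts out sharing every block
        snap.clones.add(clone_name)               # implicit parent -> child dependency
        self.origin[clone_name] = (dataset, snapname)

    def destroy_snapshot(self, dataset, snapname):
        snap = self.snaps[(dataset, snapname)]
        if snap.clones:
            # Refuse and list the dependents instead of destroying the snapshot.
            raise DependencyError(f"snapshot has dependent clones: {sorted(snap.clones)}")
        del self.snaps[(dataset, snapname)]

    def destroy_dataset(self, name):
        origin = self.origin.pop(name, None)
        if origin is not None:
            self.snaps[origin].clones.discard(name)  # drop the dependency on the origin
        del self.live[name]


pool = ToyPool()
pool.create("tank/home", {1, 2, 3})
pool.snapshot("tank/home", "monday")
print(pool.snapshot_held_blocks("tank/home", "monday"))  # 0: nothing has changed yet
pool.overwrite("tank/home", 3, 4)
print(pool.snapshot_held_blocks("tank/home", "monday"))  # 1: block 3 is held only by the snapshot
pool.clone("tank/home", "monday", "tank/scratch")
try:
    pool.destroy_snapshot("tank/home", "monday")
except DependencyError as err:
    print(err)
pool.destroy_dataset("tank/scratch")
pool.destroy_snapshot("tank/home", "monday")  # succeeds once the clone is gone
```

Keeping the dependency as a set of clone names on the snapshot makes the "cannot destroy while a clone exists" rule a single emptiness check, and destroying the clone is what releases it.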

Volume: A logical volume exported as a raw or block device. This type of dataset should only be used under special circumstances.

Snapshot: A read-only version of a file system or volume at a given point in time. It is specified as … or ….

ZFS File System Hierarchy: A ZFS storage pool is a logical collection of devices that provide space for datasets. A storage pool is also the root of the ZFS file system hierarchy. The root of the pool can be treated as a file system, for example by mounting and unmounting it, taking snapshots, and setting properties. The physical storage characteristics, however, are managed by the zpool(1M) command. See zpool(1M) for more information on creating and administering pools.
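Because the pool is the root of the dataset hierarchy, a dataset's ancestry can be read directly from its slash-separated name. The Python sketch below assumes names like the hypothetical `tank/home/alice`, checks only a few naming rules, and treats the MAXNAMELEN (256-byte) limit this page gives for dataset names as an inclusive upper bound; that boundary is an assumption, since C implementations may reserve a byte for a terminator.

```python
MAXNAMELEN = 256  # bytes; treated here as an inclusive limit (an assumption)


def check_name(name: str) -> None:
    """Reject names that are empty, have empty components, or exceed MAXNAMELEN bytes."""
    if not name or any(part == "" for part in name.split("/")):
        raise ValueError(f"malformed dataset name: {name!r}")
    if len(name.encode("utf-8")) > MAXNAMELEN:
        raise ValueError(f"dataset name longer than {MAXNAMELEN} bytes")


def pool_of(name: str) -> str:
    """The first component names the pool, which is also the root file system."""
    check_name(name)
    return name.split("/", 1)[0]


def ancestors(name: str) -> list[str]:
    """Parent datasets from the pool root down, excluding the dataset itself."""
    check_name(name)
    parts = name.split("/")
    return ["/".join(parts[:i]) for i in range(1, len(parts))]


print(pool_of("tank/home/alice"))    # tank
print(ancestors("tank/home/alice"))  # ['tank', 'tank/home']
```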

Debian OpenZFS free

Datasets are named by their path within the pool (pool/…), where the maximum length of a dataset name is MAXNAMELEN (256 bytes).

File system: A ZFS dataset of type filesystem can be mounted within the standard system namespace and behaves like other file systems. While ZFS file systems are designed to be POSIX compliant, known issues exist that prevent compliance in some cases. Applications that depend on standards conformance might fail due to nonstandard behavior when checking file system free space.
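A free-space check of the kind the caveat above refers to might look like this minimal Python sketch, which uses the POSIX `statvfs` call through `os.statvfs` (Unix only). On ZFS, datasets draw on space shared across the whole pool, and snapshots can keep deleted data allocated, so these figures may shift for reasons unrelated to the file system being queried; treat the result as an estimate, not a guarantee that a write of that size will succeed.

```python
import os


def free_space(path: str) -> dict:
    """Return total, free, and available bytes for the file system containing path."""
    st = os.statvfs(path)
    return {
        "total": st.f_blocks * st.f_frsize,
        "free": st.f_bfree * st.f_frsize,        # includes space reserved for root
        "available": st.f_bavail * st.f_frsize,  # what an unprivileged process may use
    }


info = free_space("/")
print(f"{info['available'] / 2**30:.1f} GiB available to unprivileged users")
```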

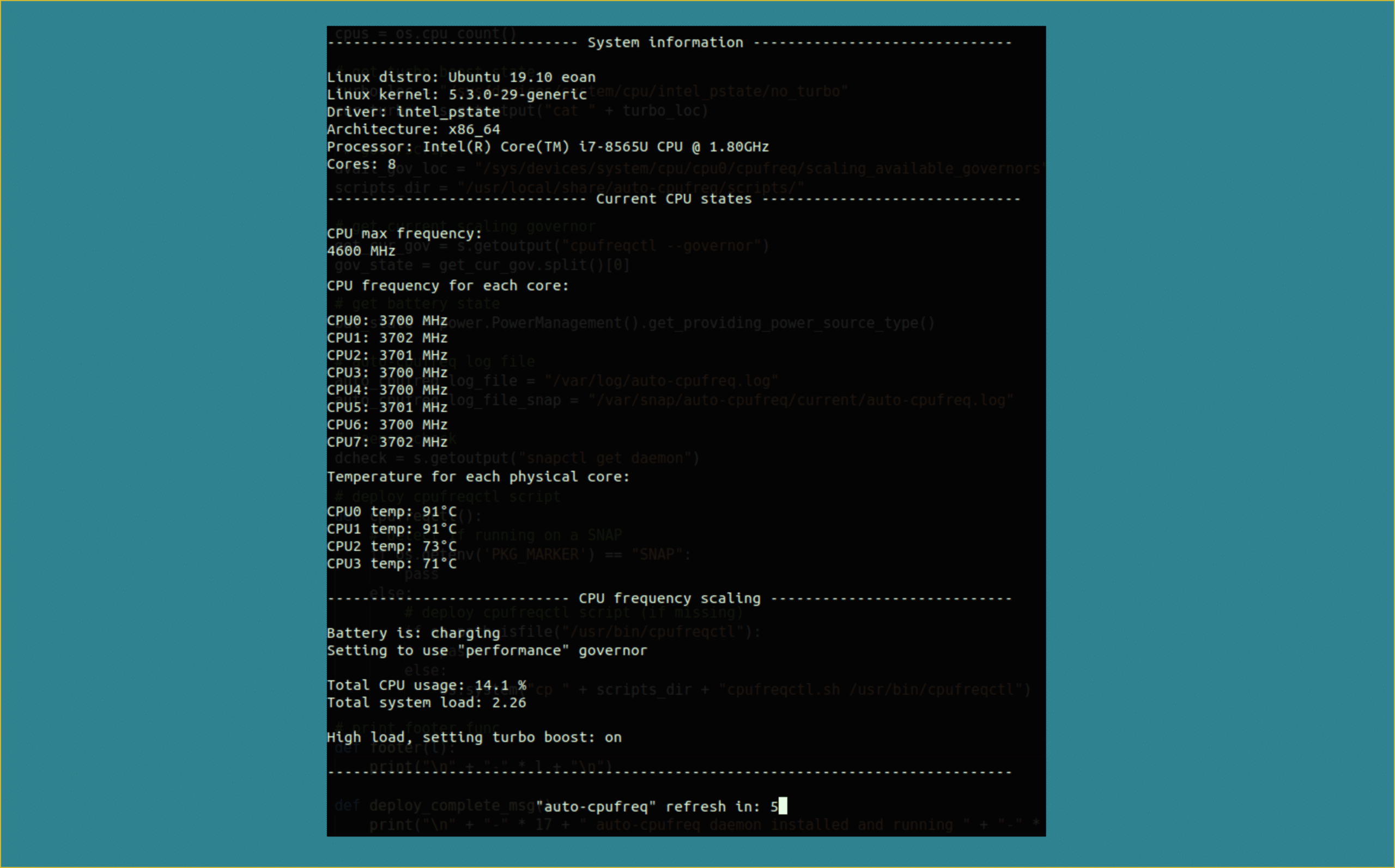

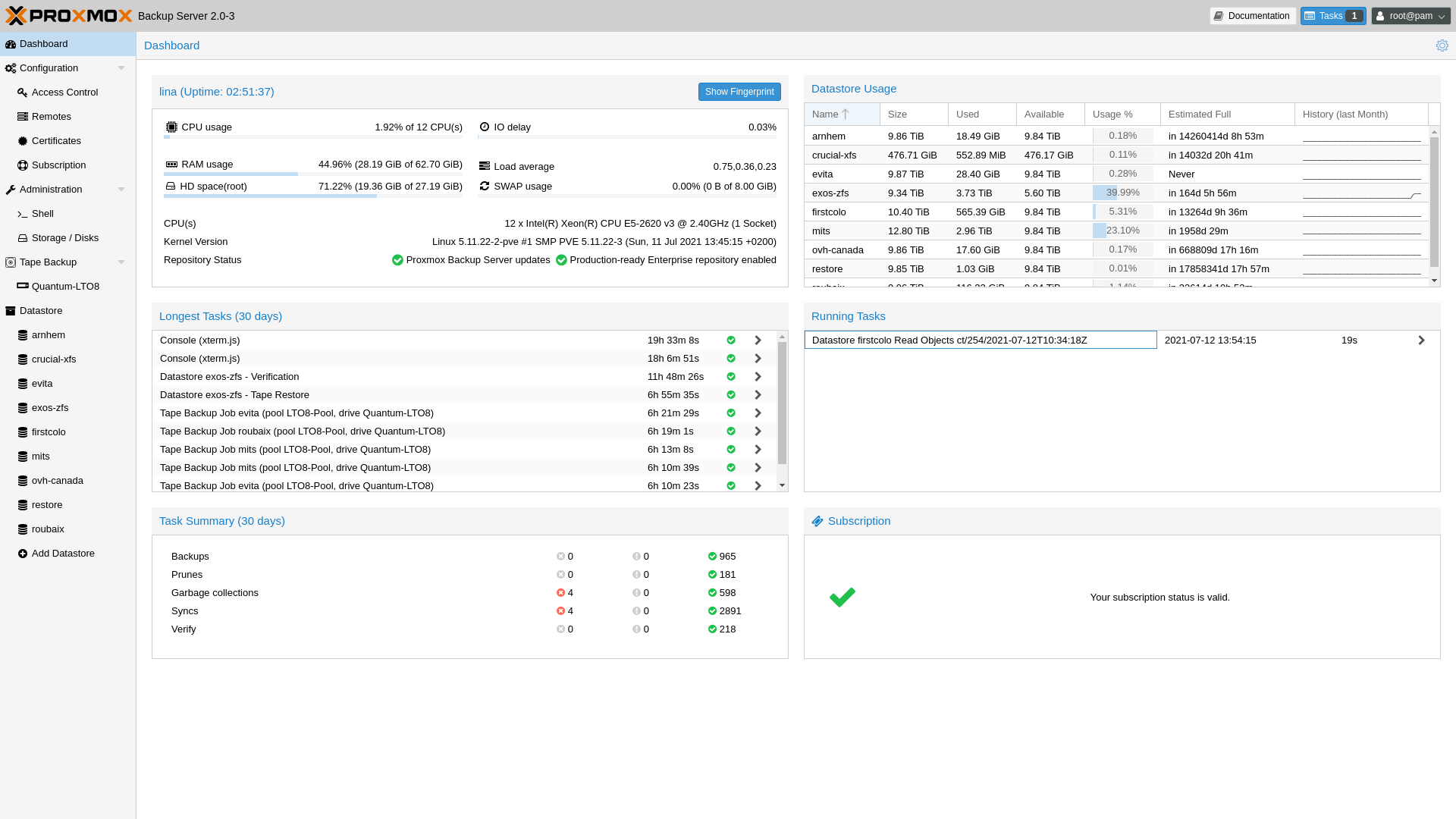

zfs create …
zfs rename filesystem|volume filesystem|volume
zfs set property=value filesystem|volume|snapshot
zfs get … "all" | property filesystem|volume|snapshot
zfs inherit property filesystem|volume|snapshot
zfs allow "everyone"|user|group perm|… volume
zfs allow -s setname setname filesystem|volume
zfs unallow -s setname … filesystem|volume

The zfs command configures ZFS datasets within a ZFS storage pool, as described in zpool(1M).

In your tests, I observed that the maximum number of active threads for asynchronous operations was set to 1. That is too low and could lead to poor read performance. I need some details about your system (disk information for the ZFS pool, system memory, and CPU). Here is a suggestion that you could use and tune for your system; it works pretty well for my 12-core system (in /etc/modprobe.d/zfs.…).
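Before tuning anything, it helps to see the current values. The following Python sketch assumes Linux with the OpenZFS kernel module loaded, which exposes module parameters under /sys/module/zfs/parameters. Reading the per-vdev limits on concurrently active asynchronous reads and writes (`zfs_vdev_async_read_max_active` and related parameters) is an interpretation of what the answer calls "active threads", not something the answer states. The helper `options_line` only formats a line in modprobe.d syntax from values you supply; it is not the configuration the answer suggests.

```python
from pathlib import Path

# Where Linux exposes the loaded zfs module's parameters.
PARAM_DIR = Path("/sys/module/zfs/parameters")

# OpenZFS per-vdev I/O scheduler limits for asynchronous reads and writes.
ASYNC_PARAMS = (
    "zfs_vdev_async_read_min_active",
    "zfs_vdev_async_read_max_active",
    "zfs_vdev_async_write_min_active",
    "zfs_vdev_async_write_max_active",
)


def read_params(names=ASYNC_PARAMS, base=PARAM_DIR):
    """Map each parameter name to its current integer value, or None if unreadable."""
    values = {}
    for name in names:
        try:
            values[name] = int((Path(base) / name).read_text().strip())
        except (OSError, ValueError):
            values[name] = None  # module not loaded, parameter absent, or not an integer
    return values


def options_line(overrides):
    """Format a modprobe.d 'options' line for the zfs module from caller-chosen values."""
    pairs = " ".join(f"{key}={value}" for key, value in sorted(overrides.items()))
    return f"options zfs {pairs}"


for name, value in read_params().items():
    print(name, value)
```

For example, `options_line({"zfs_vdev_async_read_max_active": 8})` returns `options zfs zfs_vdev_async_read_max_active=8`; the 8 is a placeholder, not a recommendation, and real values should come from benchmarking your own pool.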
